For my last Code Club of the term we embraced Christmas and had a bash at coding “The 12 Days of Christmas” on our Micro:bits. With the MakeCode blocks the code gets a bit busy, but stick with it and you’ll have an annoying earworm in no time!
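Most of the busyness comes from the song being cumulative: each verse repeats every earlier gift in reverse order. A minimal sketch of that structure in plain Python, assuming one common version of the lyrics (this is not the club's MakeCode program, and `verse` and `song` are hypothetical names), is an outer loop over the days with an inner loop counting back down:

```python
# A sketch of the cumulative structure of "The 12 Days of Christmas".
# Hypothetical helper names; not the MakeCode program used at the club.

ORDINALS = ["first", "second", "third", "fourth", "fifth", "sixth",
            "seventh", "eighth", "ninth", "tenth", "eleventh", "twelfth"]

GIFTS = [
    "a partridge in a pear tree",
    "two turtle doves",
    "three French hens",
    "four calling birds",
    "five gold rings",
    "six geese a-laying",
    "seven swans a-swimming",
    "eight maids a-milking",
    "nine ladies dancing",
    "ten lords a-leaping",
    "eleven pipers piping",
    "twelve drummers drumming",
]


def verse(day):
    """Return the lines of the verse for `day` (1 to 12)."""
    lines = [f"On the {ORDINALS[day - 1]} day of Christmas my true love gave to me"]
    # Count back down from today's gift to the very first one.
    for n in range(day, 0, -1):
        gift = GIFTS[n - 1]
        # From the second day on, the partridge line starts with "and".
        if n == 1 and day > 1:
            gift = "and " + gift
        lines.append(gift)
    return lines


def song():
    """Return every verse of the song, one list of lines per day."""
    return [verse(day) for day in range(1, 13)]


if __name__ == "__main__":
    for lines in song():
        print("\n".join(lines))
        print()
```

In MakeCode the same shape can be built from a “for” loop and an array of gifts (or of melody phrases), which avoids copying the same blocks twelve times over.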


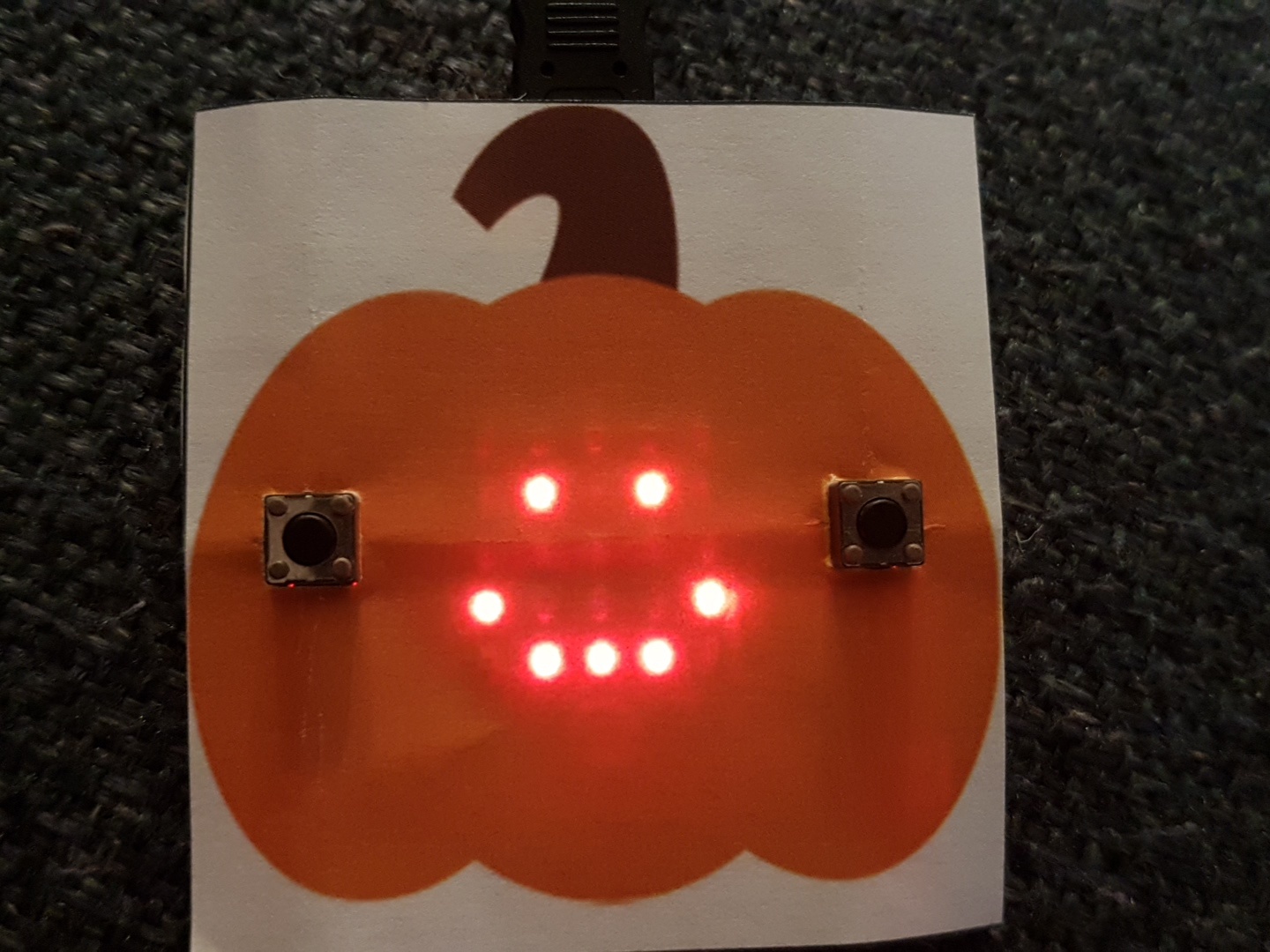

Micro:bit Pumpkin

As I write this post it’s Halloween in the UK. I run a Code Club at a local school, and today is club day. So why not combine the two with some digital pumpkin carving!

All you need to do is print a pumpkin shape, sized to fit a Micro:bit, on standard printer paper. The LEDs are bright enough to shine through the paper and display whatever pattern or shape you make.

The code can be as simple or as adventurous as you like. Use the “show icon” block for one of the predefined shapes, or “show LEDs” to draw your own. Attach different shapes to different actions, or even animate them.
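If you prefer text to blocks, here is a minimal sketch of the “draw your own” idea, assuming MicroPython on the Micro:bit. MicroPython’s `Image` accepts a string of five rows separated by colons, each digit a brightness from 0 to 9. The helper `pattern_to_image_string` and the `PUMPKIN_FACE` pattern are hypothetical, written in plain Python so you can try them on any computer first:

```python
# Hypothetical helper: turn a 5x5 '#'/'.' drawing into the colon-separated
# string format accepted by MicroPython's microbit.Image.

def pattern_to_image_string(rows, brightness=9):
    """Convert five 5-character rows ('#' = on, anything else = off) to an Image string."""
    if len(rows) != 5 or any(len(row) != 5 for row in rows):
        raise ValueError("a Micro:bit pattern must be 5 rows of 5 characters")
    if not 0 <= brightness <= 9:
        raise ValueError("brightness must be between 0 and 9")
    on = str(brightness)
    # Each row becomes five digits; rows are joined with ':'.
    return ":".join(
        "".join(on if ch == "#" else "0" for ch in row) for row in rows
    )


# A simple pumpkin face: two eyes, a nose and a grin.
PUMPKIN_FACE = [
    ".#.#.",
    ".....",
    "..#..",
    "#...#",
    ".###.",
]

if __name__ == "__main__":
    print(pattern_to_image_string(PUMPKIN_FACE))
```

On the Micro:bit itself you would then use `from microbit import display, Image` and `display.show(Image(pattern_to_image_string(PUMPKIN_FACE)))`. For a simple animation, build a list of such images and pass it to `display.show(frames, delay=500)`.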

Here’s the Word Template I created. The original pumpkin clipart is from TechFlourish.com and to the best of my knowledge is free for personal use.

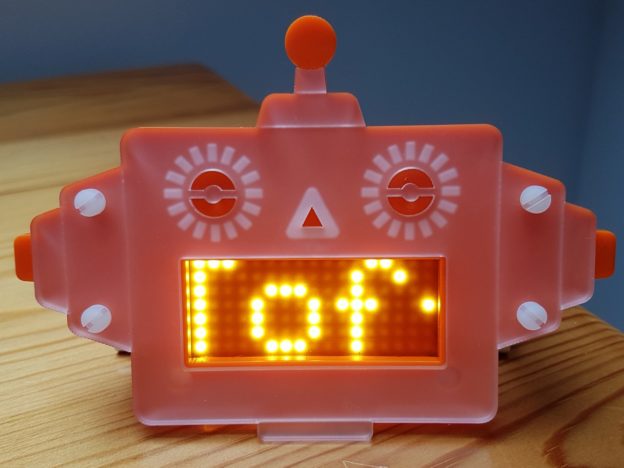

LEGO Batman Clock Hack

Holy Batman

Inspired by Dan Aldred’s LEGO clock hacks (http://www.tecoed.co.uk/darth-beats.html and http://www.tecoed.co.uk/yoda-tweets.html), I picked up a broken Batman clock from eBay for some hacking shenanigans. Building on Dan’s great work, I set myself several aims:

- Add a Pimoroni Scroll pHAT HD, which would allow both text and graphics to be displayed

- Attempt to retain the existing button board

- See if the onboard piezo was usable

- Light the white eyes behind the cowl

Scroll Bot and Pong!

A new wireless Pi Zero, the Pi Zero W, has been heralded in, and with it a set of new kits from Pimoroni. All the kits are things of beauty, as you would expect from the Pirates of Sheffield, but the Scroll Bot caught my eye for two reasons:

- It’s an absolutely awesome looking kit

- I was building a Pi Zero-based badge for the 5th Raspberry Pi Birthday

Embedding a PiZero in a Robosapien

Summary

I embedded a Pi Zero into a Robosapien v1 and can now control it over Wi-Fi using Python.